Building and Deploying AI Agents for Kubernetes


What if AI agents weren’t just smart but self-sufficient—scaling up, adapting on the fly, and making decisions faster than you can say ‘Kubernetes’?

AI agents are revolutionizing automation across various sectors, from chatbots to predictive analytics. However, ensuring their efficiency, scalability, and resilience remains a significant challenge. This is where Kubernetes, the gold standard for container orchestration, plays a pivotal role.

Kubernetes gives AI agents the ability to scale, self-heal, and balance workloads automatically, so they can run with far less manual intervention.

Kubernetes adoption in general has grown significantly in recent years. Over 60% of organizations have integrated Kubernetes into their operations, and the Cloud Native Computing Foundation (CNCF) reports an increase in adoption rates from 78% to 96% between 2019 and 2020. Kubernetes is also used by more than 5.6 million developers globally, representing 31% of all backend developers.

In this guide, we will walk you through deploying AI agents on Kubernetes, covering containerization, scaling, monitoring, and resource management. Whether you're aiming to optimize performance or ensure rock-solid reliability, you'll learn how to build AI-driven workloads that practically run themselves.

What are AI Agents?

AI agents are more than just software programs; they are intelligent systems designed to perceive their environment, process data, and take action to achieve specific goals. Whether you’re using them to respond to user queries, make predictions, or automate workflows, these agents operate autonomously to streamline complex tasks.

In Kubernetes environments, AI agents can also drive automation: they can manage cloud resources, detect system anomalies, and optimize containerized workloads by adjusting compute resources. Used this way, AI agents become an integral part of your infrastructure, helping keep operations efficient and resilient.
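An agent that adjusts workloads needs permission to call the Kubernetes API. As a minimal sketch, assuming a hypothetical operations agent that runs under a ServiceAccount named `ai-ops-agent` in a hypothetical `ai-agents` namespace, the RBAC objects below let it watch pods and scale or patch Deployments in that namespace only:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ai-ops-agent          # hypothetical name
  namespace: ai-agents        # hypothetical namespace
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ai-ops-agent
  namespace: ai-agents
rules:
  # Read pod state to detect anomalies such as crash loops or frequent restarts
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
  # Adjust replica counts and resource settings on Deployments
  - apiGroups: ["apps"]
    resources: ["deployments", "deployments/scale"]
    verbs: ["get", "list", "patch", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ai-ops-agent
  namespace: ai-agents
subjects:
  - kind: ServiceAccount
    name: ai-ops-agent
    namespace: ai-agents
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ai-ops-agent
```

Using a namespaced Role instead of a ClusterRole keeps the agent's blast radius small; widen the rules only as far as the agent's actual tasks require.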

However, not all AI agents function the same way.

- Reactive Agents: These are the ‘quick responders’ of the AI world. They react to inputs without memory or learning, like rule-based chatbots.

- Proactive Agents: Anticipate needs and take actions based on predefined rules (e.g., AI recommendation systems).

- Learning-Based Agents: Adapt over time using machine learning, improving decisions based on past data (e.g., AI fraud detection systems).

Now that we know what AI agents are, let's see how Kubernetes takes their potential to the next level by providing a strong platform for deploying and scaling them.

Streamlining AI Workload Deployment with Kubernetes

Integrating AI agents with Kubernetes allows you to optimize your AI workloads’ scalability, reliability, and efficiency. Kubernetes’ powerful orchestration capabilities make it the perfect solution for managing the lifecycle of AI agents, enabling seamless deployment and scaling across distributed environments.

Once your AI agents are containerized, Kubernetes provides dynamic resource allocation and fault tolerance, allowing your AI systems to adapt and grow with changing demands. This makes Kubernetes a practical choice for deploying and managing AI workloads effectively.

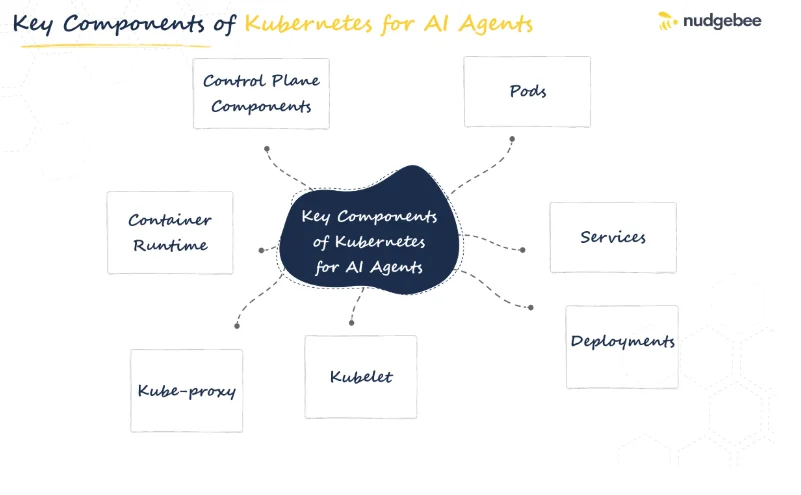

Key Components of Kubernetes for AI Agents

- Pods: The smallest deployable units in Kubernetes. Each pod encapsulates one or more containers that share networking and storage, providing isolation and a unit for resource management.

- Services: Provide a stable network endpoint and load balancing in front of a set of pods, enabling communication between AI agents and other services.

- Deployments: Manage the lifecycle of pods, ensuring that the desired number of replicas is maintained for high availability.

- Kubelet: It is an agent that runs on each worker node in the cluster. Kubelet manages the containers on that node and communicates with the Kubernetes API server.

- Kube-proxy: This component manages network routing for services within the cluster.

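- Container Runtime: The container runtime is responsible for running containers. Kubernetes supports runtimes that implement the Container Runtime Interface (CRI), such as containerd and CRI-O. Docker Engine is no longer supported directly since dockershim was removed in Kubernetes 1.24, though it can still be used through the cri-dockerd adapter, and images built with Docker run on any of these runtimes.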
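To make the pod/container relationship concrete, here is a minimal sketch of one Pod running two containers that share a volume: the agent itself and a log-streaming sidecar. The pod name, the `app: ai-agent-demo` label, the image tag, and the assumption that the agent writes to `/var/log/agent/agent.log` are all illustrative. In practice you would rarely create bare Pods and would let a Deployment create them instead.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: ai-agent-demo                 # hypothetical name
  labels:
    app: ai-agent-demo                # distinct label so no existing Service selects this pod
spec:
  volumes:
    # Scratch volume shared by both containers for the pod's lifetime
    - name: agent-logs
      emptyDir: {}
  containers:
    # Main container: the AI agent (assumed to write its log file into the shared volume)
    - name: ai-agent
      image: your-docker-repo/ai-agent:1.0.0
      ports:
        - containerPort: 8080
      volumeMounts:
        - name: agent-logs
          mountPath: /var/log/agent
    # Sidecar: streams the agent's log file to its own stdout
    - name: log-streamer
      image: busybox:1.36
      command: ["sh", "-c", "tail -n+1 -F /var/log/agent/agent.log"]
      volumeMounts:
        - name: agent-logs
          mountPath: /var/log/agent
```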

Control Plane Components:

- Kube-apiserver: The central management entity that exposes the Kubernetes API.

- etcd: A key-value store used for storing configuration data and state information.

- Kube-scheduler: Assigns pods to nodes based on resource availability and constraints.

- Kube-controller-manager: Manages controllers that ensure the desired state of the cluster.

With Kubernetes’ powerful features in mind, let’s walk through the steps to build and deploy AI agents that leverage these capabilities successfully.

Steps to Build AI Agents on Kubernetes

1. Containerization

The first step in deploying an AI agent on Kubernetes is to containerize it using Docker. Here is a sample Dockerfile for an AI agent:

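```dockerfile
# Use a currently supported Python release (3.8 is end-of-life)
FROM python:3.12-slim

# Flush Python output immediately so logs reach the container's stdout
ENV PYTHONUNBUFFERED=1

# Set the working directory
WORKDIR /app

# Copy the requirements file first so the dependency layer is cached between builds
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the AI agent code
COPY . .

# Document the port the agent listens on (matches containerPort in the Deployment)
EXPOSE 8080

# Command to run the AI agent
CMD ["python", "agent.py"]
```

2. Creating a Kubernetes Deployment Manifest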

Once the AI agent is containerized and the image has been pushed to a container registry your cluster can pull from, create a Kubernetes Deployment manifest.

Here’s an example:

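```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ai-agent
spec:
  replicas: 3
  selector:
    matchLabels:
      app: ai-agent
  template:
    metadata:
      labels:
        app: ai-agent
    spec:
      containers:
        - name: ai-agent
          # Pin an explicit version tag rather than :latest so rollouts are reproducible
          image: your-docker-repo/ai-agent:1.0.0
          ports:
            - containerPort: 8080
          # Illustrative values; size them for your model. A CPU request is
          # required for the CPU-based autoscaling shown in step 4.
          resources:
            requests:
              cpu: 500m
              memory: 512Mi
            limits:
              memory: 1Gi
```

3. Service Discovery and Load Balancing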

To enable communication between different components, define a Kubernetes Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ai-agent-service
spec:
  selector:
    app: ai-agent
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: ClusterIP
```

4. Monitoring and Scaling AI Agents

Monitoring is crucial for maintaining optimal performance. Tools like Prometheus and Grafana can be integrated with Kubernetes for resource monitoring. Additionally, you can implement horizontal pod autoscaling:

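```yaml
# autoscaling/v2 is the stable HPA API. CPU-based scaling needs a resource
# metrics provider (such as metrics-server) and CPU requests on the target pods.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: ai-agent-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ai-agent
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 80   # scale out when average CPU exceeds 80% of requests
```

5. Deployment Strategies for AI Agents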

Implementing Rolling Updates for Minimal Downtime:

Rolling updates, the default Deployment strategy, replace pods incrementally, so new versions of your AI agents roll out without taking the service offline.
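As a sketch, the fields below could be merged into the `ai-agent` Deployment to make rollouts conservative: `maxUnavailable: 0` keeps full capacity, `maxSurge: 1` adds one new pod at a time, and the readiness probe keeps traffic away from a new pod until it reports ready. The `/healthz` path and the probe timings are assumptions; the agent would need to serve that endpoint.

```yaml
# Fragment to merge into the ai-agent Deployment manifest
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1         # at most one pod above the desired count during a rollout
      maxUnavailable: 0   # never drop below the desired number of ready pods
  template:
    spec:
      containers:
        - name: ai-agent
          readinessProbe:
            httpGet:
              path: /healthz   # hypothetical health endpoint exposed by the agent
              port: 8080
            initialDelaySeconds: 10
            periodSeconds: 5
```

The readiness probe matters for AI agents that load large models at startup: a container can be running while still loading weights, and it should not receive requests until it is ready. `kubectl rollout status deployment/ai-agent` follows a rollout, and `kubectl rollout undo deployment/ai-agent` reverts to the previous revision.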

Using Canary Releases for Gradual Rollout:

Test new features with a small subset of users before full deployment, reducing risks associated with new releases.
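Kubernetes has no built-in canary object, but a basic canary can be built from two Deployments behind one Service. In this sketch, a hypothetical `ai-agent-canary` Deployment runs one replica of a hypothetical new tag. Because its pods carry `app: ai-agent`, the existing `ai-agent-service` routes to them, so with three stable replicas roughly a quarter of new connections reach the canary. This assumes the stable Deployment's selector and pod labels also include `track: stable`, so the two Deployments' selectors do not overlap.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ai-agent-canary            # hypothetical name
spec:
  replicas: 1                      # one canary pod alongside the stable replicas
  selector:
    matchLabels:
      app: ai-agent
      track: canary
  template:
    metadata:
      labels:
        app: ai-agent              # matched by ai-agent-service, so this pod receives live traffic
        track: canary              # distinguishes canary pods from stable ones
    spec:
      containers:
        - name: ai-agent
          image: your-docker-repo/ai-agent:1.1.0   # hypothetical new release
          ports:
            - containerPort: 8080
```

If the canary's error rate and latency look healthy, roll the new tag out to the stable Deployment and delete the canary; if not, deleting the canary Deployment removes its pods from the Service. Precise percentage splits need weighted routing from a service mesh or ingress controller.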

6. Resource Management

Efficient resource management in Kubernetes ensures optimal performance for AI agents by dynamically allocating computational resources. Key strategies include:

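- Setting Resource Requests & Limits: Requests tell the scheduler how much CPU and memory to reserve for a pod; limits cap what a container can consume, preventing overuse and stabilizing workloads.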

- Using Horizontal Pod Autoscaler (HPA): Scales pods dynamically based on demand.

- Optimizing Scheduling & Node Affinity: Assigns AI tasks to suitable nodes.

- Managing GPU & Accelerators: Allocates hardware efficiently for AI workloads.
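These strategies map onto a few fields of the pod spec. The following is a minimal sketch, not a tuned configuration: the CPU and memory figures are placeholders, the `accelerator: nvidia-gpu` node label and the GPU taint are assumptions about how your GPU nodes are set up, and the `nvidia.com/gpu` resource exists only on nodes running the NVIDIA device plugin.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: ai-agent-gpu                 # hypothetical name
spec:
  affinity:
    nodeAffinity:
      # Schedule only onto nodes labeled as GPU nodes (the label is an assumption)
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: accelerator
                operator: In
                values: ["nvidia-gpu"]
  tolerations:
    # Allow scheduling onto GPU nodes that are tainted to keep other workloads off
    - key: nvidia.com/gpu
      operator: Exists
      effect: NoSchedule
  containers:
    - name: ai-agent
      image: your-docker-repo/ai-agent:1.0.0
      resources:
        requests:
          cpu: "2"                   # reserved when the scheduler places the pod
          memory: 4Gi
        limits:
          memory: 8Gi                # the container is OOM-killed above this
          nvidia.com/gpu: 1          # GPUs go in limits; the request defaults to the same value
```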

7. Monitoring and Logging

Effective monitoring and logging ensure AI agents’ performance and reliability. Key tools and strategies include:

- Prometheus & Grafana for monitoring:

  - Prometheus collects real-time system metrics.

  - Grafana visualizes data to track resource usage and performance trends.

- ELK Stack for logging:

  - Elasticsearch: Stores and indexes logs.

  - Logstash: Processes and filters log data.

  - Kibana: Provides visualization for quick issue detection.
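On the Prometheus side, a minimal sketch of a scrape job for the agent pods might look like this. It assumes the agent exposes Prometheus-format metrics at the default `/metrics` path on container port 8080, and that Prometheus runs in the cluster with RBAC permission to list pods; the job name is arbitrary.

```yaml
# Fragment of prometheus.yml
scrape_configs:
  - job_name: ai-agent
    kubernetes_sd_configs:
      - role: pod                   # discover one target per declared container port
    relabel_configs:
      # Keep only pods labeled app=ai-agent
      - source_labels: [__meta_kubernetes_pod_label_app]
        regex: ai-agent
        action: keep
      # Keep only the target for container port 8080
      - source_labels: [__meta_kubernetes_pod_container_port_number]
        regex: "8080"
        action: keep
      # Copy the namespace and pod name onto every scraped series
      - source_labels: [__meta_kubernetes_namespace]
        target_label: namespace
      - source_labels: [__meta_kubernetes_pod_name]
        target_label: pod
```

Grafana can then use this Prometheus instance as a data source. If you run the Prometheus Operator, a `ServiceMonitor` resource is the more common way to express the same scrape target.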

To make the most of your AI agents, it’s crucial to use the right tools. Here’s how NudgeBee can simplify Kubernetes management and keep your AI agents performing at their best.

How Does NudgeBee Support the Kubernetes AI Agent Deployment Process?

NudgeBee is a Kubernetes operations platform that automates and optimizes containerized application management. It simplifies scaling, monitoring, and resource allocation, enabling businesses to focus on core operations. With automation and real-time insights, NudgeBee ensures efficient, cost-effective management of cloud-native applications.

Why NudgeBee?

Troubleshooting Agent – Ensuring AI Model Reliability

- Detects and resolves Kubernetes AI agent failures in real time.

- Reduces downtime by quickly identifying root causes of performance issues.

- Automates log analysis and anomaly detection, improving system stability.

FinOps Agent – Optimizing AI Resource Usage

- Prevents over-provisioning by analyzing AI agent workloads and adjusting resources accordingly.

- Provides real-time cost insights, reducing unnecessary cloud expenses.

- Balances performance and cost efficiency by optimizing infrastructure spending.

CloudOps Agent – Automating AI Deployment & Scaling

- Dynamically manages resource allocation for AI models based on demand.

- Automates Kubernetes scaling to ensure AI agents perform efficiently under varying workloads.

- Enhances operational efficiency by streamlining AI model deployment, monitoring, and maintenance.

Conclusion

Deploying AI agents on Kubernetes provides a robust framework for efficiently managing and scaling intelligent systems. By containerizing AI agents and using Kubernetes' orchestration capabilities, organizations can achieve scalable, fault-tolerant, and adaptable AI workloads. Combining resource management strategies with dynamic scaling ensures that AI systems can meet varying demands while maintaining optimal performance.

As AI advances, Kubernetes is well placed to remain an essential platform for deploying cutting-edge applications, with the flexibility to adapt to the needs of tomorrow's AI-driven systems. The future of AI agents on Kubernetes promises even greater automation, resilience, and intelligence, paving the way for more self-sufficient and autonomous systems.

Streamline your AI agent deployment with NudgeBee. With a smarter Kubernetes management platform, you can automate troubleshooting, optimize costs, and enhance operational efficiency. Get started today!