Guide to Chain of Thought (CoT) Prompting with Examples

Ever tried explaining why the sky is blue to a five-year-old? At first, you might be tempted to say, “Because science,” but that won’t cut it. Instead, you break it down: Sunlight is made of different colors, blue light scatters the most, and that’s why the sky looks blue. Congratulations! You’ve just used the idea behind Chain of Thought (CoT) prompting: reasoning step by step, not just to educate a curious kid but to structure your own thinking.

Now, imagine an AI model trying to explain the same thing. Without CoT, it might say, “The sky is blue because it is.” Not very helpful, right? That’s because a model asked for a direct answer often skips the logical steps a person would spell out. With CoT prompting, we can guide AI to reason more like us, whether it’s solving physics problems, explaining quantum entanglement, or even cracking a Sherlock Holmes-style deduction puzzle.

In this guide, we’ll explore how CoT prompting helps AI decompose complex problems, enhance reasoning, and deliver more accurate answers.

Let’s get into it!

Understanding Chain of Thought (CoT) Prompting

Chain of Thought (CoT) prompting is a technique that improves a language model’s problem-solving by encouraging step-by-step reasoning. Instead of generating an immediate answer, the model is guided to break a complex problem down into smaller, more manageable steps.

Working through these intermediate steps tends to improve accuracy and logical consistency on tasks that require critical thinking and sequential reasoning. Another advantage is transparency: because the reasoning is written out, you can see how the model arrived at its answer.

Compared to standard prompting, CoT prompting is better suited to multi-step problems. With standard prompting, you provide an input and expect an immediate answer, which can lead to oversimplified or incorrect results. CoT prompting, on the other hand, encourages the model to reason methodically, which often leads to more accurate and insightful solutions.

How Chain of Thought (CoT) Prompting Works

CoT prompting takes advantage of large language models’ (LLMs) fluency and pattern recognition to enhance problem-solving. By asking the model to write out its intermediate reasoning, it draws on the model’s ability to produce structured explanations, improving reasoning and decision-making across a wide range of tasks and domains. One of the key strengths of CoT prompting is that it mimics human problem-solving.

Just as you would break down a complex problem into smaller, more manageable parts, CoT prompting helps AI decompose tasks into logical steps. This approach makes it easier to tackle multi-step problems, ensuring that each stage builds on the previous one to arrive at a well-reasoned solution.

Another important aspect of CoT prompting is its ability to guide reasoning in a step-by-step manner. Rather than jumping to conclusions, the model follows a structured thought process, analyzing each part of the problem before forming a final answer. This structured reasoning is particularly useful for tasks that require logical deductions, detailed calculations, or multi-layered problem-solving, ultimately leading to more reliable and insightful outputs.

Variants of Chain of Thought (CoT) Prompting

CoT prompting comes in several forms, each designed to improve the reasoning abilities of large language models (LLMs). These variants differ in how the model processes information, structures its reasoning, and arrives at conclusions. The three primary types (Zero-Shot CoT, Few-Shot CoT, and Auto-CoT) offer different levels of guidance and adaptability.

1. Zero-Shot CoT: Guiding Reasoning with Simple Phrases

Zero-shot CoT prompting involves instructing the model to think logically without providing any examples. A simple yet highly effective approach is using reasoning cues such as:

- “Let’s think step by step.”

- “Let’s analyze this logically before answering.”

- “Break the problem down into smaller parts.”

Adding these phrases shifts the model from giving an immediate response to working through the problem in stages. This technique can substantially improve accuracy on reasoning tasks such as math problems, logical deductions, and commonsense reasoning, particularly with larger models.

For instance, if asked, “If a train travels 60 miles in one hour, how long does it take to travel 180 miles?”, a standard prompt might give an answer like “Three hours” without explanation. However, with Zero-Shot CoT, the model breaks it down as:

- The train moves at a speed of 60 miles per hour.

- To find the time, divide the distance (180 miles) by the speed (60 miles per hour).

- The result is 3 hours.

Even though no examples were provided, the model reasoned through the problem simply by being prompted to think step by step.
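In practice, a Zero-Shot CoT prompt is just the question followed by a reasoning cue. The sketch below assumes a plain-text “Q:/A:” layout; the function name `zero_shot_cot_prompt` and that layout are illustrative choices rather than a required API, and sending the prompt to a model is left out.

```python
def zero_shot_cot_prompt(question: str, cue: str = "Let's think step by step.") -> str:
    """Build a Zero-Shot CoT prompt: the question followed by a reasoning cue.

    The "Q:/A:" layout is an illustrative convention, not a requirement.
    """
    # Placing the cue where the answer would begin nudges the model to
    # write out its reasoning before committing to a final answer.
    return f"Q: {question}\nA: {cue}"


if __name__ == "__main__":
    q = ("If a train travels 60 miles in one hour, "
         "how long does it take to travel 180 miles?")
    print(zero_shot_cot_prompt(q))
```

Swapping in a different cue, such as “Break the problem down into smaller parts.”, only requires passing a different `cue` argument.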

2. Few-Shot CoT: Learning from Examples

Few-shot CoT prompting strengthens the model’s reasoning by providing multiple step-by-step examples before introducing a new problem. Exposing the model to structured reasoning patterns allows it to apply similar logic to new tasks.

For example, when solving a math problem, the prompt might include:

Example 1:

Question: If a car travels 50 miles in one hour, how long does it take to travel 150 miles?

Answer:

- The speed of the car is 50 miles per hour.

- To find the time, divide the total distance (150 miles) by the speed (50 miles per hour).

- 150 ÷ 50 = 3 hours.

Example 2:

Question: A factory produces 200 units per day. How many units are produced in 5 days?

Answer:

- The factory’s daily production rate is 200 units.

- Multiply the daily output by the number of days: 200 × 5 = 1,000.

- The factory produces 1,000 units in 5 days.

New Question for the Model: If a printer prints 30 pages per minute, how many pages will it print in 4 minutes?

Because the model has seen structured examples, it is more likely to break down the problem correctly, arriving at the logical conclusion of 120 pages (30 × 4).

Few-shot CoT is particularly effective in domains requiring structured reasoning, such as mathematics, physics, legal reasoning, and natural language inference.
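A few-shot CoT prompt can be assembled mechanically from worked examples. The sketch below is one possible layout, assuming each example is stored as a question plus a list of reasoning steps; the names `few_shot_cot_prompt` and `EXEMPLARS` are hypothetical, and the examples mirror the ones above.

```python
from typing import List, Tuple

# Each exemplar is (question, reasoning steps); the last step states the answer.
Exemplar = Tuple[str, List[str]]


def few_shot_cot_prompt(exemplars: List[Exemplar], new_question: str) -> str:
    """Prepend worked step-by-step examples to a new question."""
    blocks = []
    for question, steps in exemplars:
        reasoning = "\n".join(f"- {step}" for step in steps)
        blocks.append(f"Question: {question}\nAnswer:\n{reasoning}")
    # The new question ends with an open "Answer:" so the model continues
    # in the same step-by-step format it has just seen.
    blocks.append(f"Question: {new_question}\nAnswer:")
    return "\n\n".join(blocks)


EXEMPLARS: List[Exemplar] = [
    ("If a car travels 50 miles in one hour, how long does it take to travel 150 miles?",
     ["The speed of the car is 50 miles per hour.",
      "To find the time, divide the total distance (150 miles) by the speed (50 miles per hour).",
      "150 ÷ 50 = 3 hours."]),
    ("A factory produces 200 units per day. How many units are produced in 5 days?",
     ["The factory's daily production rate is 200 units.",
      "Multiply the daily output by the number of days: 200 × 5 = 1,000.",
      "The factory produces 1,000 units in 5 days."]),
]

if __name__ == "__main__":
    print(few_shot_cot_prompt(
        EXEMPLARS,
        "If a printer prints 30 pages per minute, how many pages will it print in 4 minutes?"))
```

Keeping every exemplar in the same format (question, bulleted steps, answer last) matters more than the exact wording, since the model imitates the structure it sees.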

3. Auto-CoT: Automatically Generating Diverse Reasoning Examples

Auto-CoT takes few-shot prompting a step further by generating the reasoning examples automatically. Instead of a person hand-writing demonstrations, the model itself produces step-by-step solutions for a diverse set of sample questions, which saves manual effort and adapts more easily to different problem types.

This method follows a process like:

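- Example Generation: The system selects diverse questions from the same domain and has the model generate step-by-step solutions for them (for example, using a “Let’s think step by step” cue).

- Pattern Recognition: These generated solutions serve as demonstrations that expose the model to common reasoning structures.

- New Problem Application: The demonstrations are placed before the new problem so the model can apply the same reasoning techniques to it.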

For example, in a physics problem about force and motion, Auto-CoT might generate diverse example problems covering Newton’s Laws, projectile motion, and friction before attempting a new, unseen problem.

This technique is particularly useful for scientific reasoning, complex logic puzzles, and multi-step decision-making processes, where exposure to a variety of approaches strengthens the AI’s ability to generalize solutions.
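The sketch below is a heavily simplified illustration of the Auto-CoT idea, not the published method. It assumes two hypothetical callables supplied by you: `llm`, which sends a prompt to a model and returns its text, and `cluster_key`, which assigns each question to a group. The original Auto-CoT work clusters questions using sentence embeddings and applies simple selection heuristics; here a caller-provided grouping function stands in for that step.

```python
from typing import Callable, Dict, List


def build_auto_cot_prompt(
    pool: List[str],
    new_question: str,
    llm: Callable[[str], str],          # hypothetical: prompt -> model text
    cluster_key: Callable[[str], str],  # hypothetical: question -> group label
    cue: str = "Let's think step by step.",
) -> str:
    """Simplified Auto-CoT: one model-written demonstration per question group."""
    # Step 1: keep the first question seen in each group, for diversity.
    representatives: Dict[str, str] = {}
    for q in pool:
        representatives.setdefault(cluster_key(q), q)

    # Step 2: let the model write the reasoning chain for each representative
    # with a Zero-Shot CoT cue, so no example is written by hand.
    demos = []
    for q in representatives.values():
        stem = f"Q: {q}\nA: {cue}"
        demos.append(f"{stem} {llm(stem)}")

    # Step 3: place the generated demonstrations before the new question.
    demos.append(f"Q: {new_question}\nA: {cue}")
    return "\n\n".join(demos)
```

Because the demonstrations come from the model itself, some generated chains may be wrong; selecting questions from different groups is meant to keep one bad chain from dominating every demonstration.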

Let’s take a look at each variant and its key benefit.

| Variant | Description | Example Usage | Key Benefit |
| --- | --- | --- | --- |
| Zero-Shot CoT | Uses reasoning prompts like “Let’s think step by step” without prior examples. | Quick reasoning tasks, logical deductions, commonsense questions. | Simple and effective for guiding structured thought. |
| Few-Shot CoT | Provides multiple examples of step-by-step reasoning before a new problem. | Math problems, physics questions, structured decision-making. | Helps the model recognize reasoning patterns. |
| Auto-CoT | AI generates diverse reasoning examples before solving new problems. | Scientific reasoning, legal analysis, multi-step logic tasks. | Expands adaptability across different problem types. |

Each variant of CoT prompting serves a unique role in improving the logical reasoning capabilities of AI models. The choice of technique depends on the complexity of the problem and the level of guidance required.

Benefits and Challenges of Chain of Thought (CoT) Prompting

CoT prompting is a powerful way to enhance how AI models reason and solve problems. It enables the model to carefully think through each part of a problem, improving clarity and decision-making. However, while CoT offers many advantages, it also comes with some challenges that you should be aware of when using it.

Benefits of Chain of Thought Prompting:

Chain of Thought prompting helps guide the model step by step, improving its reasoning, accuracy, and clarity in complex tasks. Let’s take a closer look at the key benefits of using CoT prompting.

- Helps You Break Down Complex Problems into Manageable Steps

- When you use CoT prompting, the AI doesn’t just jump to an answer—it works through the problem step by step. This makes it easier to handle multi-step reasoning tasks, like solving math problems, analyzing logical statements, or making well-informed decisions. Instead of struggling with complex problems all at once, you can guide the model to approach them in a structured way.

- Expands the AI’s Reasoning Abilities

- Prompting the model to think through each step logically helps it make better use of the reasoning ability it already has. This is especially useful for tasks that require critical thinking, legal analysis, scientific explanations, or commonsense reasoning. Instead of surface-level responses, the AI engages in structured thought processes, leading to more reliable answers.

- Improves Accuracy and Logical Reasoning

- CoT prompting tends to improve accuracy because the AI follows a logical sequence instead of jumping straight to a guess. If you’re working on math, physics, or formal logic problems, CoT helps reduce errors because each step builds on the previous one, and mistakes in intermediate steps are easier to spot. With this approach, the AI is less likely to produce oversimplified or incorrect responses.

Challenges and Limitations of CoT Prompting

When using Chain of Thought (CoT) prompting, you might notice a few challenges along the way. It’s helpful to understand these so you can use CoT more effectively. Here are some common limitations you need to be aware of:

- Depends on the Model’s Size and Capabilities

- The effectiveness of CoT prompting largely depends on the size and complexity of the AI model you’re working with. While larger models benefit greatly from CoT, smaller models may struggle with maintaining logical consistency over multiple steps, leading to incomplete or incorrect reasoning.

- The AI Can Still Make Logical Errors

- Even though CoT improves reasoning, it doesn’t guarantee perfect logic. The model may still misinterpret information, introduce biases, or make flawed assumptions along the way. That’s why it’s important to review and verify the AI’s outputs, especially in high-stakes areas like law, medicine, or finance.

- Scalability Issues: Added Latency and Cost

- Because CoT prompting makes the model generate step-by-step reasoning before its final answer, every response contains more tokens, which increases latency and compute cost. This overhead applies to any model and can be especially limiting on smaller or resource-constrained deployments. If speed and efficiency are your main concerns, you may need to balance detailed reasoning against quick responses, depending on the use case.

How You Can Balance These Benefits and Challenges

Despite these limitations, CoT prompting remains one of the most widely used ways to improve AI reasoning and accuracy. To get the most out of it, you can experiment with different CoT techniques such as Auto-CoT, or combine CoT with other prompting methods. As AI technology evolves, CoT is likely to play an even bigger role in making AI more reliable, interpretable, and useful for complex problem-solving.
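One widely cited way to combine CoT with another technique is self-consistency: sample several independent reasoning chains for the same question and keep the final answer that appears most often. The sketch below assumes a hypothetical `sample` callable that runs one CoT completion (with sampling enabled) and returns only its final answer; the function name is illustrative.

```python
from collections import Counter
from typing import Callable, List


def self_consistent_answer(sample: Callable[[], str], n: int = 5) -> str:
    """Run n independent CoT samples and return the most common final answer."""
    # `sample` is a hypothetical stand-in for one model call that returns
    # only the final answer extracted from a sampled reasoning chain.
    answers: List[str] = [sample().strip() for _ in range(n)]
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    return Counter(answers).most_common(1)[0][0]
```

The trade-off is cost: n samples mean roughly n times the tokens of a single CoT response, which makes the latency concern above even more relevant.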

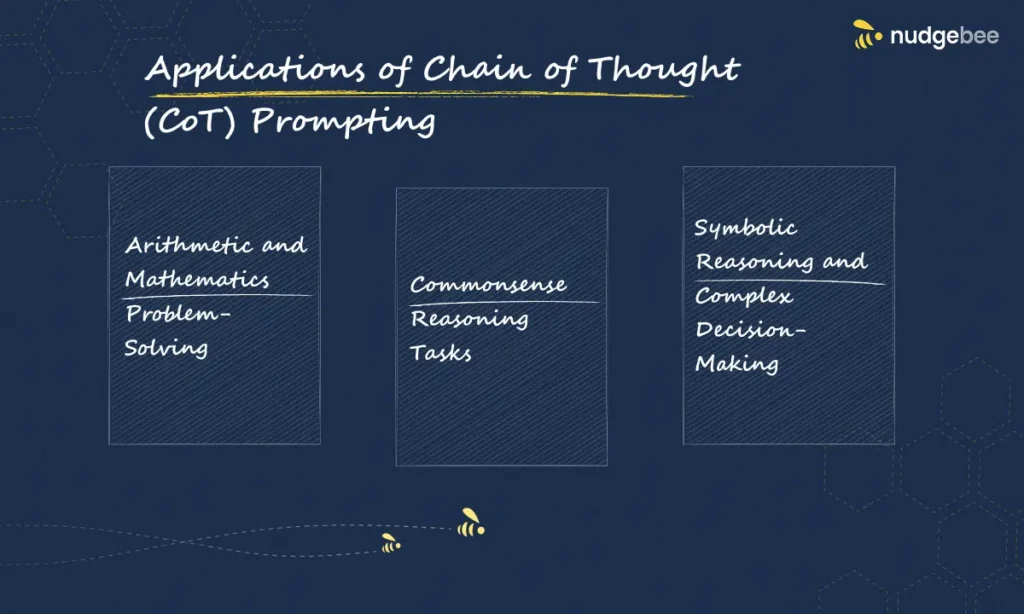

Applications of Chain of Thought (CoT) Prompting

CoT prompting has a wide range of applications across various domains, helping AI models process information more logically and systematically. It works by guiding the model to think through each step clearly, improving its performance on complex tasks that require deeper reasoning. Here are some key areas where CoT prompting proves invaluable:

1. Arithmetic and Mathematics Problem-Solving

CoT prompting significantly improves the AI’s ability to solve multi-step arithmetic and mathematical problems by guiding it through structured calculations rather than producing an immediate (and sometimes incorrect) answer.

For example, instead of simply stating the result of a math problem, CoT enables the AI to explain its reasoning:

Problem: A store sells apples for $2 each. If you buy 5 apples, how much will it cost?

CoT Response:

- Each apple costs $2.

- You are purchasing 5 apples.

- To find the total cost, multiply: 5 × 2 = $10.

- Final Answer: $10.

This method reduces errors in calculations and makes the reasoning process transparent, which is especially useful for educational tools, tutoring systems, and automated grading systems.
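For automated grading, it helps to ask the model to end its reasoning with a fixed marker such as “Final Answer:” and then parse that line. A minimal sketch using Python’s standard `re` module, assuming responses follow the format shown above; the function names are hypothetical.

```python
import re
from typing import Optional


def extract_final_answer(cot_response: str) -> Optional[str]:
    """Return the text after the last 'Final Answer:' marker, or None if absent."""
    # '.' does not match newlines, so each captured answer ends at a line break.
    matches = re.findall(r"Final Answer:\s*(.+)", cot_response)
    if not matches:
        return None
    # Use the last marker in case the model restates its answer.
    return matches[-1].strip().rstrip(".")


def is_correct(cot_response: str, expected: str) -> bool:
    """Compare the extracted final answer with an expected answer string."""
    answer = extract_final_answer(cot_response)
    return answer is not None and answer == expected
```

Only the final answer is graded here; the intermediate steps remain available for a human reviewer or tutor to inspect.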

2. Commonsense Reasoning Tasks

AI models often struggle with commonsense reasoning, where human intuition plays a key role. CoT prompting helps bridge this gap by prompting the model to think through everyday scenarios logically.

For instance:

Question: If you leave a cup of hot coffee on the table for an hour, what will happen?

CoT Response:

- The coffee is initially hot.

- Over time, it will lose heat to the surrounding air.

- Since room temperature is lower than the coffee’s temperature, it will gradually cool down.

- Final Answer: The coffee will cool down, approaching room temperature.

With step-by-step reasoning, CoT enables AI to perform better in chatbots, virtual assistants, and decision-support systems that require logical thinking about real-world scenarios.

3. Symbolic Reasoning and Complex Decision-Making

CoT is highly effective for tasks that require logical deductions, symbolic reasoning, and high-level decision-making, such as:

- Legal and policy analysis: AI can analyze legal cases step by step, considering precedents and implications.

- Medical diagnosis and reasoning: AI can assess symptoms logically before suggesting possible conditions.

- Strategic planning and game theory: AI can evaluate multiple possibilities in board games, business decisions, or AI-driven simulations.

For example, in a logic puzzle:

Question: All roses are flowers. Some flowers fade quickly. Does this mean all roses fade quickly?

CoT Response:

- All roses belong to the category of flowers.

- Some flowers fade quickly, but the premise does not say which ones.

- The flowers that fade quickly might not include any roses at all.

- Therefore, we cannot conclude that all roses fade quickly.
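This reasoning can be checked mechanically: if even one small “world” exists in which both premises are true but some rose does not fade quickly, the conclusion does not follow. The brute-force search below is an illustrative sketch using only the standard library; the function name and the three-property encoding of each object are our own choices.

```python
from itertools import product
from typing import Optional, Tuple

# One object = (is_rose, is_flower, fades_quickly); a world is a tuple of objects.
Obj = Tuple[bool, bool, bool]
World = Tuple[Obj, ...]


def find_counterexample(n: int = 2) -> Optional[World]:
    """Search every world of n objects for one where both premises hold
    but 'all roses fade quickly' is false."""
    for world in product(product([False, True], repeat=3), repeat=n):
        all_roses_are_flowers = all(flower for rose, flower, _ in world if rose)
        some_flower_fades = any(flower and fades for _, flower, fades in world)
        all_roses_fade = all(fades for rose, _, fades in world if rose)
        if all_roses_are_flowers and some_flower_fades and not all_roses_fade:
            return world
    return None


if __name__ == "__main__":
    print(find_counterexample())
```

With two objects the search succeeds (for instance, a rose that does not fade plus a non-rose flower that does), while a single object cannot satisfy both premises and break the conclusion at once, so `find_counterexample(1)` returns `None`.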

With CoT prompting, AI can provide more structured, interpretable, and reliable decision-making processes, making it useful in domains that demand high levels of reasoning and accuracy.

As AI continues to evolve, CoT is likely to play an important role in fields like education, business intelligence, healthcare, and law, making AI more interpretable and human-like in its decision-making processes.

Enhancing AI-Driven Operations in Cloud Environments with NudgeBee

NudgeBee is an innovative platform that provides AI-powered agents designed to optimize and automate operations within cloud-native environments, particularly Kubernetes clusters.

By integrating NudgeBee’s specialized agents, such as the Troubleshooting Agent, FinOps Agent, and CloudOps Agent, you can significantly enhance the efficiency and reliability of your Site Reliability Engineering (SRE) and DevOps teams.

Key Features of NudgeBee:

- Troubleshooting Agent: This agent assists in rapidly identifying and resolving issues within Kubernetes clusters, reducing downtime and improving system reliability.

- FinOps Agent: Focused on financial operations, this agent provides continuous real-time optimization of cloud resources, helping to manage costs effectively.

- CloudOps Agent: Automates routine and time-consuming cloud operations, allowing your team to focus on more strategic tasks.

NudgeBee’s AI-driven agents will allow you to automate cloud operations, boost system performance, and manage costs effectively. Whether your goal is to simplify troubleshooting, enhance financial workflows, or streamline cloud management, NudgeBee delivers smart, tailored solutions for today’s dynamic cloud environments.

Conclusion

Chain of Thought (CoT) prompting is changing how AI models process and reason through complex problems. By guiding models to reason step by step, it improves accuracy and logical flow and equips them to handle a wide range of tasks, such as analytical thinking and strategic planning.

While CoT offers significant benefits, it also comes with challenges, such as dependency on model size and potential logical errors. However, with advancements in AI and innovative tools like NudgeBee, organizations can optimize AI-driven operations and improve efficiency in cloud-native environments.

Don’t let complex tasks slow you down: use CoT prompting to boost accuracy and logical reasoning in AI models. Sign up today!